"Do You Have a Safe Word Yet?" A Call to Arms Against Deep Fake Voice Attacks

In the digital age, we've seen a steady evolution of threats, but perhaps none as chilling as the rise of deep fake voices and videos. Malicious actors can now clone someone's voice with relative ease and put it to criminal use: persuading others to take dangerous actions, make fraudulent payments, or open gaps in security. This threat is too severe to overlook. So how do we protect ourselves in a landscape where our ears can't always be trusted?

Trusting the Voice You Recognize

Let's paint a picture. You're at your desk, engrossed in work, when your phone rings. The voice on the other end sounds unmistakably like your supervisor. They sound hurried, perhaps a little stressed, and they ask you to authorize an urgent payment to a new vendor.

In the past, recognizing their voice might have been all you needed. But in the era of deep fakes, recognition isn't assurance. So you calmly ask, "Before I go ahead, can you give me the passphrase?" There's a brief pause. If the caller is truly your supervisor, they'll promptly give the agreed-upon passphrase. If the caller falters or can't answer, that's a glaring red flag.

Shielding Against Deception: The Challenge-Response Tactic

The challenge-response tactic is potent in its simplicity. In the face of technologically advanced threats, it's tempting to reach for equally high-tech solutions, such as app-generated authentication codes, to verify identities. But consider the employee and supervisor described above. The employee, not wanting to disappoint their supervisor, feels pressured to act quickly, and the urgency in the supervisor's voice may tempt them to skip any protocol that takes time. A safeguard that slows people down is often the first one abandoned under pressure.

Social engineering, the practice of manipulating people into hasty or harmful actions, thrives on exactly these hurried moments, when perceived urgency pushes someone to set protocols aside. In such high-pressure scenarios, a quick verbal challenge (asking the caller for a predetermined passphrase or safe word) is an immediate, easy-to-deploy defense against a security breach.

MFA and Voice Verification: A Layered Approach to Security

Multi-factor authentication (MFA) is a security method that requires users to present two or more independent verification factors to gain access to a resource, such as an application, an online account, or a VPN. These factors typically come from different categories: something you know (a password), something you have (a security token, a mobile device, or a temporary code from an authenticator app), and something you are (biometrics, like a fingerprint).

Drawing a parallel, the voice challenge-response tactic can be viewed as a twist on the 'something you know' factor of MFA. Recognizing a caller's voice is, informally, a 'something you are' check, and deep fakes can now counterfeit it. Just as you need the correct password to access an account, a caller must know the agreed-upon passphrase to verify their identity over the phone. That shared safe word adds a second, independent layer of security, helping ensure that voice interactions remain authentic and trusted.
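To make the password parallel concrete, here is a minimal sketch, assuming a team wants a help desk or finance tool to record the agreed passphrase and check what a caller says against it. It treats the phrase exactly like a password: only a salted PBKDF2 hash is stored, and the check uses a constant-time comparison. The function names `normalize`, `enroll`, and `verify`, and the iteration count, are hypothetical choices for illustration, not part of any established product or standard.

```python
import hashlib
import hmac
import os

# Hypothetical parameter: PBKDF2 iteration count chosen for illustration.
ITERATIONS = 200_000


def normalize(phrase: str) -> str:
    """Lowercase and collapse whitespace so 'Blue  Heron' matches 'blue heron'."""
    return " ".join(phrase.lower().split())


def enroll(passphrase: str) -> tuple[bytes, bytes]:
    """Return (salt, digest); store these instead of the passphrase itself."""
    salt = os.urandom(16)
    digest = hashlib.pbkdf2_hmac(
        "sha256", normalize(passphrase).encode("utf-8"), salt, ITERATIONS
    )
    return salt, digest


def verify(attempt: str, record: tuple[bytes, bytes]) -> bool:
    """Check a caller's answer against the stored salted hash."""
    salt, expected = record
    candidate = hashlib.pbkdf2_hmac(
        "sha256", normalize(attempt).encode("utf-8"), salt, ITERATIONS
    )
    # Constant-time comparison avoids leaking how many bytes matched.
    return hmac.compare_digest(candidate, expected)


if __name__ == "__main__":
    record = enroll("Blue heron at midnight")
    print(verify("blue heron  at MIDNIGHT", record))  # True
    print(verify("blue heron", record))  # False
```

Normalizing case and spacing keeps a correctly spoken phrase from failing on transcription quirks. Because a phrase spoken aloud can be overheard, a team would likely rotate it over time; that policy is outside this sketch.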

A Call to Leadership: Ensuring Authentic Communications

Defending against deep fakes isn't just about detecting voice imitation; it's about ensuring authenticity in communications. By making challenge-response verification standard practice in the workplace, leaders in every industry can cultivate an environment where the integrity of communications is valued and maintained.

Adopting voice verification in the workplace requires a deliberate, organization-wide change effort. Company leaders must educate employees at all levels about the threats posed by deep fake voice technology and the potential impact on the company should a breach occur. This helps secure organization-wide buy-in to the practice. It also builds trust: employees often feel extra pressure to fulfill requests from those in supervisory roles, as in the scenario described earlier, so they need to know that asking for a passphrase isn't an attempt to undermine authority.

However, the dangers of social engineering aren't confined to interactions across hierarchical levels. Consider a finance executive who gets a call from someone who sounds exactly like a trusted peer and asks them to disclose sensitive financial details. In our increasingly digital age, everyone is a potential target, so educating all employees about cybersecurity risks is essential. A quick challenge-response check might be the only barrier between safety and exploitation.

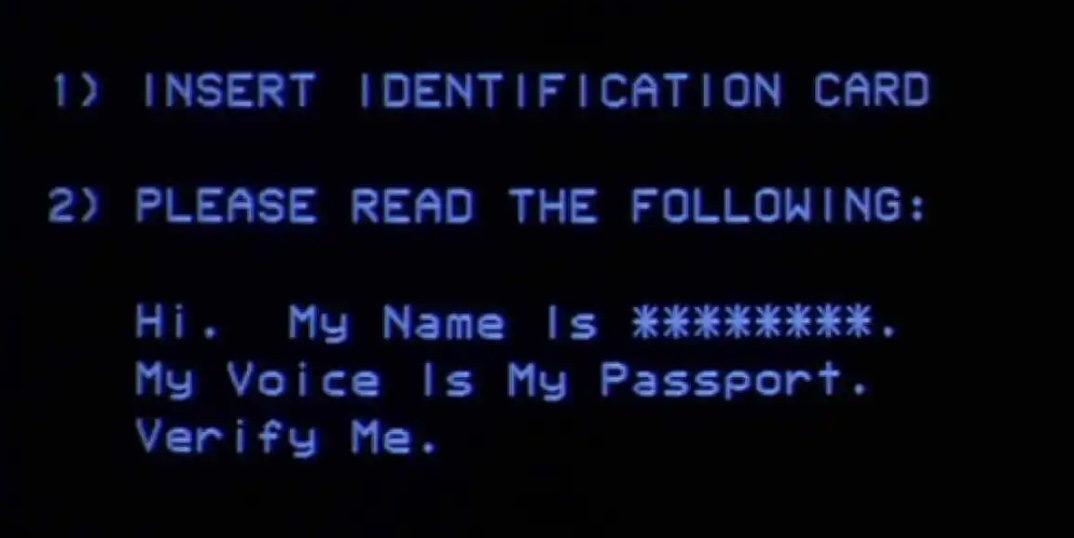

Voice Authentication: From Silver Screen to Real-World Concerns

The 1992 film Sneakers put voice authentication on screen: the heroes defeat a voiceprint lock ("My voice is my passport. Verify me.") by splicing together words from secretly recorded conversations. At the time, voiceprint security might have seemed cutting-edge and relatively secure. Today, with the proliferation of deep fake voices, that very premise is questionable, and relying on voice alone for authentication is treading on thin ice.

The Ongoing Digital Tug-of-War

This raises an intriguing question: could a deep fake voice be sophisticated enough to fool a biometric voice reader? As of early 2022, deep fake voices had advanced rapidly, but they had not yet been shown to consistently trick high-quality biometric voice authentication. These systems assess more than tonal quality; they analyze rhythm, pitch, cadence, and the unique way a speaker articulates sounds.

Still, it's a digital tug-of-war. As deep fake technology evolves, biometric solutions must keep advancing to stay ahead of it. It's a sobering reminder that in security, there's no silver bullet. Implementing layered security measures, like challenge-response mechanisms, is critical in this ongoing battle.

Beyond Business: Safeguarding Personal Interactions

While my focus so far has been on professional interactions, layered verification (including challenge-response) is just as necessary in our personal lives. A family that agrees on a safe word, for example, can quickly test a frantic call from a "relative" who claims to be in trouble and needs money sent right away. In a world where convincing voice deception is just one software release away, a challenge-response mechanism is a trusty bulwark.

Simplicity in the Face of Complexity

In a nutshell, as digital threats grow more complex, safeguarding our communications is of paramount importance. In the battle against deep fake voices, sometimes the best defense remains a simple question.