Neural Networks

Welcome to the World of Neural Networks

From the boardroom to the classroom, the buzz around artificial intelligence and machine learning grows louder every day. As a dedicated explorer in this vast field, I find myself at the crossroads of curiosity and knowledge, addressing questions that range from the fundamentally practical to the deeply philosophical. Among these, queries about neural networks often take center stage: What exactly are neural networks? How do they function? And what distinguishes supervised learning from unsupervised learning?

Neural networks might sound like a complex topic, but this track is designed to make them easy to understand for everyone, no matter your background. You don't need to be a tech expert. We’ll start with the basics and explore what neural networks are. We'll also look at how they learn — sometimes on their own (that's 'unsupervised learning') and sometimes from things we teach them ('supervised learning'). We’ll discuss how these networks are a bit like our brains, and why they're so important in the world of technology.

We’ll use straightforward examples, like sorting a bunch of coins, to explain some of these ideas. We'll also dive into real-world applications of neural networks, why they need powerful computers to learn, and how they get better over time with feedback.

By the end of this track, you’ll not just understand what neural networks are and how they function, but also appreciate their significance in our daily lives. This track is for everyone – whether you're in business, a student, or just curious about technology. Let’s embark on this fascinating journey together and discover one of the most exciting aspects of modern technology!

Introduction to Neural Networks

In the world of artificial intelligence (AI), the term 'neural networks' often emerges in conversations about machine learning. But what are these neural networks? In the simplest terms, they're a set of algorithms designed to recognize patterns, loosely inspired by the way the human brain works. Today, businesses use neural networks to streamline complex tasks, ranging from sorting vast datasets to conducting predictive analyses.

What Do Neural Networks Look Like?

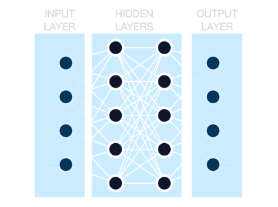

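Understanding the basic structure of neural networks can demystify some of the magic behind the AI-driven solutions and strategies that are transforming industries around the globe. Neural networks are not just theoretical constructs but practical tools driving innovation, efficiency, and competitive advantage. At its core, a neural network is a computing system loosely modeled on the human brain, designed to recognize patterns and solve problems. But unlike the organic spontaneity of human neurons, these digital counterparts are meticulously organized, typically into layers of artificial neurons: an input layer that receives the data, one or more hidden layers that transform it, and an output layer that produces the result. Each connection between neurons carries a numerical weight that determines how strongly one neuron influences the next.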
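A neural network is typically organized into layers of artificial neurons, where each neuron computes a weighted sum of its inputs and passes it through an activation function. As a minimal sketch of that idea, the Python below runs a single forward pass through a tiny network with two inputs, three hidden neurons, and one output. The weights, biases, and the choice of the sigmoid activation are hypothetical values picked by hand for illustration, not a trained model; real networks learn these numbers during training and usually rely on specialized libraries rather than plain loops.

```python
import math


def sigmoid(x):
    """Squash any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def layer(inputs, weights, biases):
    """One fully connected layer: every neuron takes a weighted sum of all
    inputs, adds its own bias, and passes the result through sigmoid."""
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        total = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        outputs.append(sigmoid(total))
    return outputs


# Hypothetical, hand-picked parameters for a 2 -> 3 -> 1 network (not trained).
HIDDEN_WEIGHTS = [[0.5, -0.2], [0.3, 0.8], [-0.6, 0.1]]  # one row per hidden neuron
HIDDEN_BIASES = [0.0, -0.1, 0.2]
OUTPUT_WEIGHTS = [[1.0, -1.5, 0.7]]  # one output neuron reading all 3 hidden neurons
OUTPUT_BIASES = [0.05]


def forward(inputs):
    """Input layer -> hidden layer -> output layer."""
    hidden = layer(inputs, HIDDEN_WEIGHTS, HIDDEN_BIASES)
    return layer(hidden, OUTPUT_WEIGHTS, OUTPUT_BIASES)


if __name__ == "__main__":
    print(forward([1.0, 0.5]))
```

Calling `forward([1.0, 0.5])` returns a list holding one number between 0 and 1. Because every layer is just a set of weighted sums followed by an activation function, the same `layer` helper is reused for both the hidden and the output layer.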

Training Neural Networks: How AI Learns to Learn

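In the world of machine learning, 'training' a neural network is akin to coaching a new employee through the intricacies of sorting coins, a task that can be approached in two distinct ways: supervised and unsupervised learning. To grasp these concepts, it's crucial to understand 'labels.' Imagine each coin has a tag specifying its year of minting; that tag is a 'label.' It gives clear information about the coin, which can be used to guide the sorting process. In supervised learning, the network trains on coins that already carry labels and learns to predict the correct label for coins it has not seen before. In unsupervised learning, the coins have no labels, so the network has to discover its own groupings, for example by size or weight.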
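Supervised learning trains on coins that carry labels, while unsupervised learning has to find groupings without them. The difference can be sketched in a few lines of Python. The coin measurements (diameter and mass) and the two coin types are hypothetical; the labels here are coin types rather than minting years only because a type can plausibly be predicted from size and weight. The supervised half trains a perceptron, a single artificial neuron that adjusts its weights whenever it sorts a labeled coin incorrectly. The unsupervised half uses k-means clustering, a classic algorithm rather than a neural network, chosen because it shows label-free grouping compactly.

```python
# Hypothetical coin measurements: (diameter in mm, mass in g).
COINS = [(19.0, 2.5), (19.1, 2.6), (18.9, 2.4),
         (24.2, 5.6), (24.3, 5.7), (24.1, 5.5)]
# Hypothetical labels for the supervised case: 0 = small type, 1 = large type.
LABELS = [0, 0, 0, 1, 1, 1]


def mean_point(points):
    """Average diameter and average mass of a list of coins."""
    n = len(points)
    return (sum(p[0] for p in points) / n, sum(p[1] for p in points) / n)


def sq_dist(a, b):
    """Squared distance between two (diameter, mass) points."""
    return (a[0] - b[0]) ** 2 + (a[1] - b[1]) ** 2


# Supervised: a perceptron (one artificial neuron) learns from labeled coins.
def train_perceptron(points, labels, lr=0.1, max_epochs=100):
    center = mean_point(points)  # centering the features keeps the bias small
    w, b = [0.0, 0.0], 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for (d, m), y in zip(points, labels):
            x = (d - center[0], m - center[1])
            pred = 1 if w[0] * x[0] + w[1] * x[1] + b > 0 else 0
            if pred != y:
                # Feedback: nudge the weights toward the correct label.
                w[0] += lr * (y - pred) * x[0]
                w[1] += lr * (y - pred) * x[1]
                b += lr * (y - pred)
                mistakes += 1
        if mistakes == 0:  # every labeled coin is now sorted correctly
            break
    return w, b, center


def classify(coin, model):
    """Predict the label (0 or 1) of a coin with a trained perceptron."""
    w, b, center = model
    x = (coin[0] - center[0], coin[1] - center[1])
    return 1 if w[0] * x[0] + w[1] * x[1] + b > 0 else 0


# Unsupervised: k-means with k = 2 groups the same coins without any labels.
def two_means(points, iterations=10):
    centroids = [points[0], points[-1]]  # simple deterministic starting guesses
    groups = [0] * len(points)
    for _ in range(iterations):
        # Assign each coin to its nearest centroid.
        groups = [min(range(2), key=lambda c: sq_dist(p, centroids[c]))
                  for p in points]
        # Move each centroid to the average of the coins assigned to it.
        for c in range(2):
            members = [p for p, g in zip(points, groups) if g == c]
            if members:
                centroids[c] = mean_point(members)
    return groups


if __name__ == "__main__":
    model = train_perceptron(COINS, LABELS)
    print("supervised prediction for a new coin:", classify((23.9, 5.4), model))
    print("unsupervised groups:", two_means(COINS))
```

The unsupervised result only says which coins belong together; it cannot name the groups, because it never saw a label.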

Why Neural Networks' Conclusions Are a Black Box

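This question comes up a lot, and it makes sense to ask: we are conditioned to think of computers and programs as entities that follow very specific logic flows and can generate detailed records of the paths they take while performing operations. Yet this is not so in the realm of neural networks. Why? Because once trained and operational, they function in many ways like the human brain. A trained network's 'knowledge' is spread across a large number of numerical weights (often millions), and each answer emerges from many weighted sums and nonlinear functions combined together rather than from a sequence of human-readable rules. We can record every one of those numbers, but the record does not explain why the network reached a particular conclusion, much as watching individual neurons fire would not explain a person's decision.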

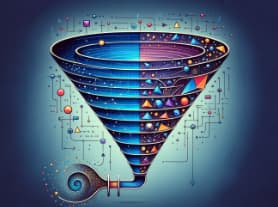

Autoencoders: Simplifying Complex Data

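Autoencoders are a class of neural networks with a somewhat counterintuitive task: they are designed to reproduce their input data at their output. This replication, however, isn't the main objective. The true 'magic' of autoencoders unfolds in the process of data compression, where the network distills the input into a lower-dimensional, compact representation, much like boiling down a sprawling article into a handful of key bullet points. This ability to abstract the essence of the data is realized through a two-part process: encoding and decoding. The encoder compresses the input into the compact representation, and the decoder tries to rebuild the original input from it. Training adjusts both halves so that the reconstruction matches the input as closely as possible, which forces the compact representation to keep only the most important information.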
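As a minimal sketch of encoding and decoding, assuming toy 2-D data that all lies on the line x2 = 2 * x1, the Python below trains a tiny linear autoencoder. The encoder squeezes each point into a single number, and the decoder expands that number back into a point. The data, the starting weights, the learning rate, and the use of plain gradient descent on the mean squared reconstruction error are all illustrative assumptions; practical autoencoders use many nonlinear layers and a deep-learning library.

```python
# Toy data (an assumption): 2-D points that all lie on the line x2 = 2 * x1,
# so a single number per point is enough to describe each one.
DATA = [(t, 2.0 * t) for t in (-1.0, -0.5, 0.0, 0.5, 1.0)]


def encode(x, w):
    """Encoder: compress a 2-D point into one number (the compact code)."""
    return w[0] * x[0] + w[1] * x[1]


def decode(z, v):
    """Decoder: expand the one-number code back into a 2-D point."""
    return (v[0] * z, v[1] * z)


def reconstruction_loss(w, v):
    """Mean squared difference between each input and its reconstruction."""
    total = 0.0
    for x in DATA:
        x_hat = decode(encode(x, w), v)
        total += (x_hat[0] - x[0]) ** 2 + (x_hat[1] - x[1]) ** 2
    return total / len(DATA)


def train(steps=1000, lr=0.05):
    """Plain gradient descent on the reconstruction loss."""
    w = [0.1, 0.1]  # encoder weights (small starting values)
    v = [0.1, 0.1]  # decoder weights
    n = len(DATA)
    for _ in range(steps):
        grad_w, grad_v = [0.0, 0.0], [0.0, 0.0]
        for x in DATA:
            z = encode(x, w)
            x_hat = decode(z, v)
            err = (x_hat[0] - x[0], x_hat[1] - x[1])
            # Chain rule: how the code z, and then each weight, affects the error.
            dz = 2 * (err[0] * v[0] + err[1] * v[1])
            for i in range(2):
                grad_v[i] += 2 * err[i] * z
                grad_w[i] += dz * x[i]
        # Update encoder and decoder together using the averaged gradients.
        for i in range(2):
            w[i] -= lr * grad_w[i] / n
            v[i] -= lr * grad_v[i] / n
    return w, v


if __name__ == "__main__":
    print("loss before training:", reconstruction_loss([0.1, 0.1], [0.1, 0.1]))
    w, v = train()
    print("loss after training:", reconstruction_loss(w, v))
    code = encode((0.8, 1.6), w)
    print("code:", code, "reconstruction:", decode(code, v))
```

Because every training point lies on one line, a single number per point is enough to rebuild it, so the reconstruction loss falls to nearly zero. Data without that kind of hidden structure could not be compressed this well by a one-number code.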