What do Neural Networks Look Like?

In this part of the series, we’re going to answer the question, “What do neural networks look like?” We'll explore the architecture of neural networks: what sits under the hood, and how their design enables them to process information and learn from it.

Imagine a digital brain where each thought or memory is connected by a vast web of pathways, much like the neurons and synapses in our own minds. This is the essence of a neural network, a series of interconnected nodes, or 'neurons,' that work together to solve problems, recognize patterns, and make decisions.

Understanding the basic structure of neural networks can demystify some of the magic behind AI-driven solutions and strategies that are transforming industries around the globe. They're not just theoretical constructs but practical tools driving innovation, efficiency, and competitive advantage.

At its core, a neural network is loosely modeled on how the human brain functions, and it is designed to recognize patterns and solve problems. But unlike the organic tangle of biological neurons, these digital counterparts are meticulously organized into layers.

The Building Blocks

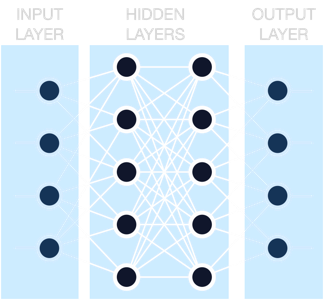

In the illustration above, you'll notice several circles arranged in columns. These are akin to neurons. They're called nodes or units, and they serve as the basic processing elements of the neural network. The lines connecting them? Those are the synapses, albeit in digital form: the pathways along which information travels.

The first column on the left represents what we call the input layer. Here, the network receives data from the outside world. It could be anything from the pixels of a digital image to the fluctuating prices of stocks.

Moving inward, we encounter what's known as the hidden layers. The term 'hidden' doesn't denote secrecy. It means these layers sit between the input and the output, so the user never sees their values directly. These layers are the neural network's "thought process," where the actual processing happens. They transform the input through mathematical functions, with each successive layer able to pick out more intricate details or patterns.

Finally, on the far right, we reach the output layer. This is where the neural network presents its findings — whether it's identifying a face in an image, translating a sentence, or predicting a market trend.

Technically, the above image represents an Artificial Neural Network (ANN), specifically a feedforward type known as a Multilayer Perceptron (MLP). In this feedforward structure, data moves in a single direction: forward from the input nodes, through any hidden nodes, to the output nodes. There are no cycles or loops, ensuring a straightforward flow of information. The MLP is a foundational form of ANN characterized by a fully connected architecture, meaning each neuron in one layer is connected to every neuron in the next. Such networks are particularly effective for tabular data and for classification and regression tasks where spatial or temporal relationships within the data are not a primary concern. Where those relationships do matter, as with images, audio, or text, specialized architectures such as convolutional networks, recurrent networks, or transformers are typically used instead.
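To make the feedforward idea concrete, here is a minimal sketch in plain Python. It assumes each node computes a weighted sum of its inputs plus a bias and passes the result through a sigmoid function, which is one common choice rather than something the diagram dictates. The layer sizes (3 inputs, 4 hidden nodes, 2 outputs), the random weights, and the helper names `make_layer` and `forward` are all hypothetical and chosen only for illustration.

```python
import math
import random

def sigmoid(x):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def make_layer(n_inputs, n_nodes, rng):
    """Build one fully connected layer: every node gets one weight per input, plus a bias."""
    return [
        {
            "weights": [rng.uniform(-1.0, 1.0) for _ in range(n_inputs)],
            "bias": rng.uniform(-1.0, 1.0),
        }
        for _ in range(n_nodes)
    ]

def forward(layers, inputs):
    """Push the inputs through each layer in turn, left to right, with no loops back."""
    activations = inputs
    for layer in layers:
        activations = [
            sigmoid(
                sum(w * a for w, a in zip(node["weights"], activations))
                + node["bias"]
            )
            for node in layer
        ]
    return activations

rng = random.Random(0)  # fixed seed so the random weights are repeatable

# Hypothetical sizes: 3 input values -> 4 hidden nodes -> 2 output nodes.
network = [make_layer(3, 4, rng), make_layer(4, 2, rng)]
outputs = forward(network, [0.5, -1.2, 3.0])
print(outputs)  # two numbers, each between 0 and 1
```

Because the weights here are random, the two outputs carry no meaning yet. The structure is the point: every node in one layer feeds every node in the next, and data only ever moves forward.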

The Dance of Algorithms

The beauty of a neural network lies not just in its structure but in its ability to learn. Through a process called training, the network compares its outputs with the expected results for the data it is shown, then adjusts the weights of the connections between nodes (those lines in the image) to reduce the error. This is akin to strengthening or weakening synaptic connections in the human brain.
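As a rough illustration of what "adjusting the weights" can mean, the sketch below trains a single node with one weight and one bias using gradient descent on the squared error. This is a deliberately tiny stand-in for how full networks are trained, not a description of any particular system. The function name `train_neuron`, the learning rate, the number of passes, and the sample data (points on the line y = 2x + 1) are all assumptions made for the example.

```python
def train_neuron(samples, learning_rate=0.05, epochs=500):
    """Fit prediction = weight * x + bias to (x, target) pairs by gradient descent."""
    weight, bias = 0.0, 0.0  # start with no knowledge at all
    for _ in range(epochs):
        for x, target in samples:
            prediction = weight * x + bias
            error = prediction - target
            # For the loss 0.5 * error**2, the gradient with respect to the
            # weight is error * x and with respect to the bias is error.
            # Stepping against the gradient shrinks the error.
            weight -= learning_rate * error * x
            bias -= learning_rate * error
    return weight, bias

# Hypothetical training data drawn from y = 2x + 1.
samples = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]
weight, bias = train_neuron(samples)
print(round(weight, 3), round(bias, 3))  # should land very close to 2 and 1
```

Each pass nudges the weight and bias a little in whichever direction reduces the error, which is the digital counterpart of strengthening or weakening a connection.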

Why Use Neural Networks?

Neural networks shine in tasks that are easy for a human but challenging for traditional computer programs, such as image and speech recognition, natural language processing, and complex decision-making. They don't require explicit programming to make complex decisions, making them incredibly powerful for a wide range of applications.

Simplicity to Complexity

The depicted neural network, while seemingly complex, is actually a simplified representation of what is used in practice. Real-world neural networks can have hundreds of layers and millions of nodes. But regardless of size, the fundamental principles remain the same.
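One way to get a feel for that growth is to count the adjustable values in a fully connected network. In the sketch below, each pair of neighboring layers contributes one weight per connection plus one bias per receiving node. The function name `count_parameters` and both sets of layer sizes are hypothetical, picked only to contrast a diagram-sized network with a somewhat larger one.

```python
def count_parameters(layer_sizes):
    """Count weights and biases in a fully connected feedforward network."""
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        total += n_in * n_out  # one weight for every connection between the layers
        total += n_out         # one bias for every node in the receiving layer
    return total

# A diagram-sized network: 3 inputs, 4 hidden nodes, 2 outputs.
small = count_parameters([3, 4, 2])

# A larger, still modest network: 784 inputs, two hidden layers of 512, 10 outputs.
large = count_parameters([784, 512, 512, 10])

print(small)  # 26
print(large)  # 669706
```

Even this modest second example has several hundred thousand values to tune, which is why adding layers and nodes increases a network's size so quickly.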

As we journey through the layers of neural networks, it becomes apparent that they are not only powerful but also adaptable instruments. The network diagram we've discussed provides a window into how AI perceives, processes, and interprets the world around us—turning raw data into actionable insights. With each node and connection fine-tuned through experience, neural networks stand at the forefront of technological evolution, continuously learning and evolving.